Introduction

Large Language Models (LLMs) are powerful tools for analyzing and extracting insights from text. However, working with large, messy documents — PDFs, Word files, scanned reports — presents unique challenges.

In this blog, I will walk you through:

- What is Unstructured?

- Why I moved away from PyPDF

- Parsing an 8-page document (Dota 2 heroes guide) using Unstructured

- How chunking improves parsing quality even with the free version

- Limitations of the free version

- Hands-on code examples and outputs

What is Unstructured?

Unstructured is an open-source Python library designed to help you extract text cleanly from documents like PDFs, DOCX, HTML, images, and more.

It comes in two flavors: the free open-source library, which this post uses, and a paid version.

Why I Switched to Unstructured After PyPDF

I initially used PyPDFLoader (LangChain's wrapper for PyPDF) for document parsing. It worked well until the documents became complex.

With longer PDFs in particular (100+ pages, images, and multiple columns), PyPDF struggled.

After struggling for days, I moved to Unstructured — and immediately saw clean, structured, usable outputs!

Before We Proceed: Key Terms

Elements: When Unstructured parses a document, it outputs a list of Element objects, such as Title, NarrativeText, ListItem, etc.

Chunking: Chunking is splitting a large document into smaller parts ("chunks") to feed into LLMs more effectively. (Example: split by page, heading, or token size.)
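To make the idea of chunking concrete, here is a minimal sketch that splits text into fixed-size chunks with a small overlap. It uses character counts as a rough stand-in for token counts. The function name chunk_text and the default sizes are illustrative choices of mine, not part of Unstructured.

```python
def chunk_text(text: str, max_chars: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of at most max_chars, overlapping by `overlap` chars."""
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    if not 0 <= overlap < max_chars:
        raise ValueError("overlap must be >= 0 and smaller than max_chars")

    chunks = []
    step = max_chars - overlap  # how far the window advances each time
    for start in range(0, len(text), step):
        chunks.append(text[start:start + max_chars])
        if start + max_chars >= len(text):
            break  # this window already reached the end of the text
    return chunks


if __name__ == "__main__":
    sample = "Dota 2 heroes each have a unique set of abilities. " * 20
    for i, chunk in enumerate(chunk_text(sample, max_chars=200, overlap=20)):
        print(i, len(chunk), repr(chunk[:40]))
```

The overlap means the end of one chunk is repeated at the start of the next, so a sentence cut at a boundary still appears whole in at least one chunk when it is shorter than the overlap.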

STEP 1: Setting up the Project

We are going to use Python. The commands below are everything you need to set up the project quickly. Since this blog focuses on Unstructured, I assume you have basic Python knowledge. Please note that I am using Linux, so use the equivalent commands on Mac/Windows where necessary.

    mkdir unstructured-demo
    cd unstructured-demo
    python3 -m venv venv
    source venv/bin/activate

    # Install required packages
    pip install unstructured "unstructured[pdf]" google-genai

Why "unstructured[pdf]"?

The basic unstructured installation supports only a few file types, such as HTML and JSON, and does not cover PDF. My document is a PDF, so we need unstructured[pdf]. This also lets us avoid installing the much larger unstructured[all-docs].

What's google-genai?

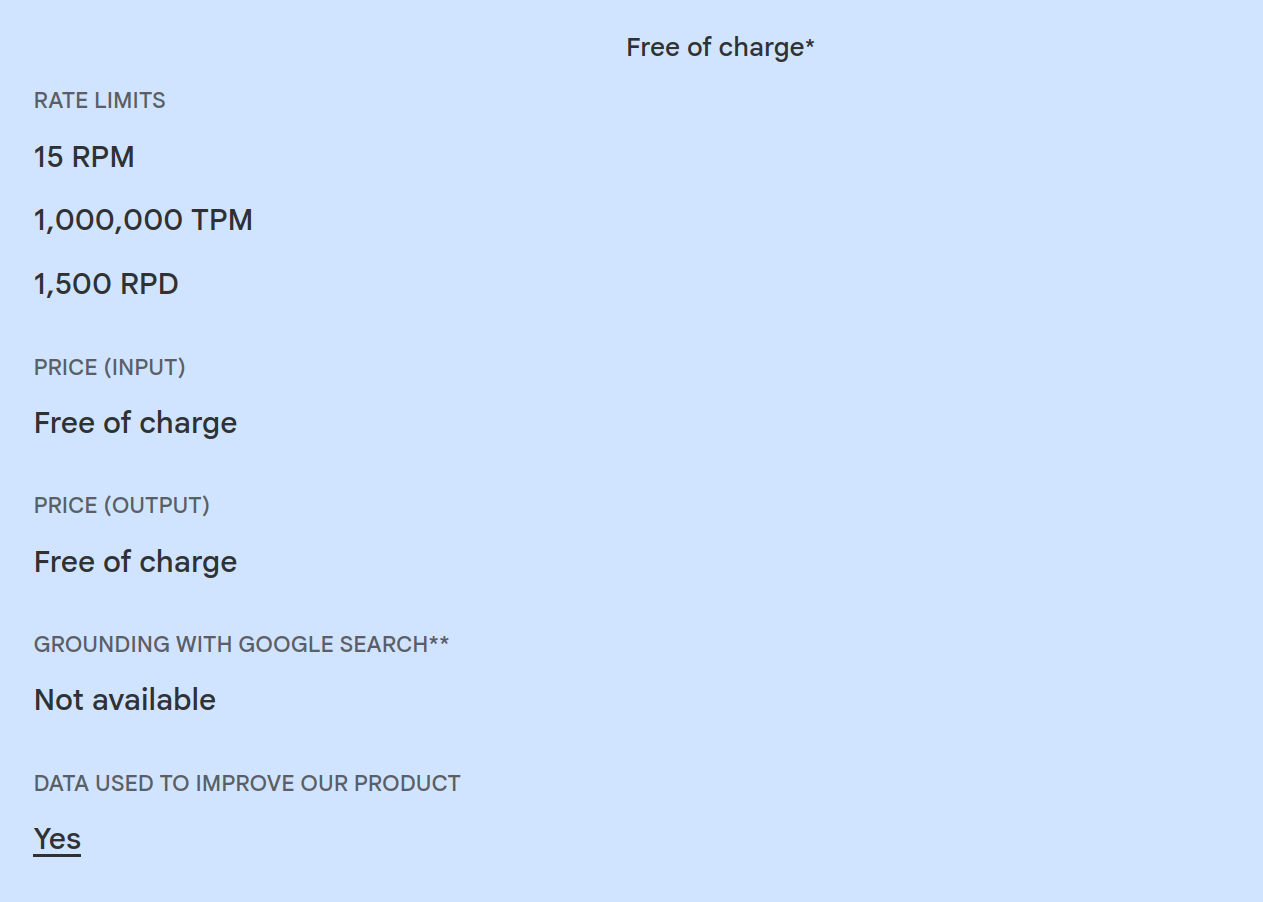

Google AI Studio offers a free plan for using their API. We will be using the LLM to compare outputs later. What's the fun without an LLM, eh?

I initially tried Python 3.13.2, but I ran into an error while installing unstructured[pdf].

ERROR: ERROR: Failed to build installable wheels for some pyproject.toml based projects (onnx)

I tried downgrading to Python 3.11 and then it worked.

Reference: https://github.com/onnx/onnx/issues/4376
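Since the install failed for me on Python 3.13.2 and worked on 3.11, a quick version check before installing can save some time. The sketch below only encodes what I observed. The helper name and the 3.13 cutoff are my assumptions, and newer onnx releases may well support newer Python versions.

```python
import sys

# Assumption: based only on my own experience (3.13.2 failed, 3.11 worked).
FIRST_FAILING_VERSION = (3, 13)


def is_below_failing_version(version_info=sys.version_info) -> bool:
    """Return True if the interpreter is older than the version that failed for me."""
    return tuple(version_info[:2]) < FIRST_FAILING_VERSION


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:3])
    if is_below_failing_version():
        print(f"Python {current}: older than 3.13 (the install worked for me on 3.11)")
    else:
        print(f"Python {current}: the onnx wheel build failed for me on 3.13.2; "
              "consider creating the virtual environment with Python 3.11")
```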

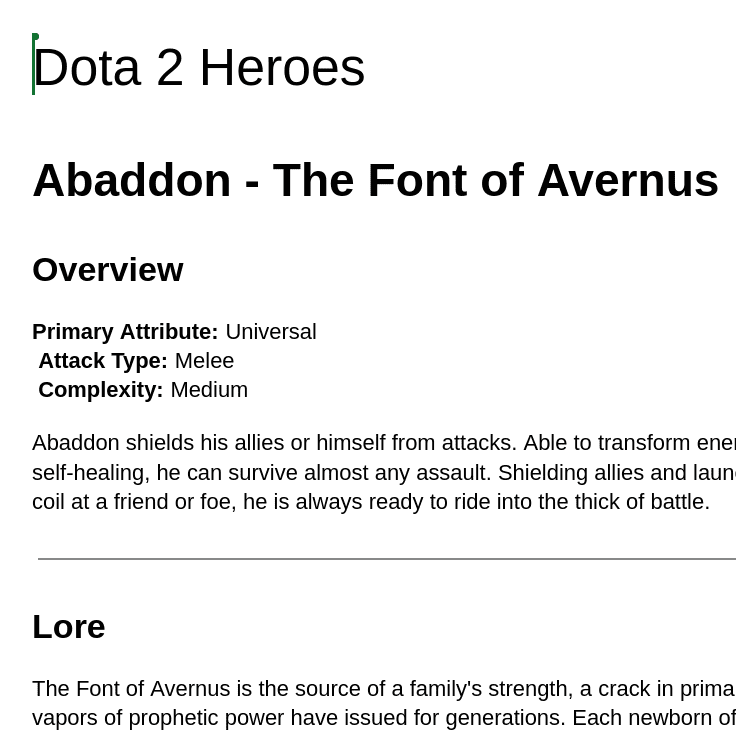

STEP 2: Preparing the document for Parsing

I have prepared a document for this example, with content taken directly from the official Dota 2 website. It is 8 pages long and covers two Dota 2 heroes (Dota 2 is a game, and one of my favourites). It is available at the Drive link below so you can use the same file, or you can use your own document.

https://docs.google.com/document/d/1-XjHYMiSa3ek1LXLz0SsUdkAV8qDz9Kl5gfjrUCzNiQ/edit?usp=sharing

Optional: Downloading the Drive Document Programmatically

You can skip this part and just download it manually and place it in your working directory.

Create download_file.py:

    import requests

    def download_file_from_google_drive(file_id, destination):
        # Direct-download URL built from the Drive file ID
        URL = f"https://drive.google.com/uc?id={file_id}"
        response = requests.get(URL)
        if response.status_code == 200:
            # Write the raw bytes to disk
            with open(destination, "wb") as f:
                f.write(response.content)
            print(f"File downloaded successfully to {destination}")
        else:
            print("Failed to download file.")

    if __name__ == "__main__":
        file_id = "1jehJZbVojANGXRpZJKYcT2c05xIlugxO"
        destination = "dota2_heroes.pdf"
        download_file_from_google_drive(file_id, destination)

Run python download_file.py and you will have a file called dota2_heroes.pdf inside your project.
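One thing worth checking after the download: if Drive returns an HTML page (for example a confirmation or permission page) instead of the file, the status code can still be 200 and the script will report success. A small sanity check, sketched below, is to confirm that the saved file starts with the PDF signature %PDF-. The helper name looks_like_pdf is mine.

```python
from pathlib import Path


def looks_like_pdf(path: str) -> bool:
    """Return True if the file exists and starts with the PDF signature b'%PDF-'."""
    file_path = Path(path)
    if not file_path.is_file():
        return False
    with file_path.open("rb") as f:
        return f.read(5) == b"%PDF-"


if __name__ == "__main__":
    target = "dota2_heroes.pdf"
    if looks_like_pdf(target):
        print(f"{target} looks like a PDF")
    else:
        print(f"{target} is missing or is not a PDF (possibly an HTML page)")
```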

A note on the URL: Google Drive viewer links won't download the file directly.

The document link (it is a PDF) is:

https://drive.google.com/file/d/1jehJZbVojANGXRpZJKYcT2c05xIlugxO/view?usp=sharing

I need to extract the file ID and create a direct download link:

https://drive.google.com/uc?id=1jehJZbVojANGXRpZJKYcT2c05xIlugxO

This way, I can programmatically download it.
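The ID extraction can also be done in code. This is a minimal sketch that assumes the viewer link has the /file/d/<id>/ shape shown above. The function names are hypothetical, and other Drive URL formats are not handled.

```python
import re

# Matches the ID segment in links shaped like .../file/d/<id>/view
_FILE_ID_PATTERN = re.compile(r"/file/d/([A-Za-z0-9_-]+)")


def extract_file_id(viewer_url: str) -> str:
    """Pull the file ID out of a Drive viewer link."""
    match = _FILE_ID_PATTERN.search(viewer_url)
    if match is None:
        raise ValueError(f"No Drive file ID found in: {viewer_url}")
    return match.group(1)


def direct_download_url(viewer_url: str) -> str:
    """Build the uc?id=... link from a viewer link."""
    return f"https://drive.google.com/uc?id={extract_file_id(viewer_url)}"


if __name__ == "__main__":
    link = "https://drive.google.com/file/d/1jehJZbVojANGXRpZJKYcT2c05xIlugxO/view?usp=sharing"
    print(direct_download_url(link))
```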

STEP 3: Parsing the Downloaded Document

Create parse_doc.py & run python parse_doc.py:

from unstructured.partition.pdf import partition_pdf

doc_path = "dota2_heroes.pdf"

# Parse the document

elements = partition_pdf(filename=doc_path)

# Print parsed elements

for element in elements:

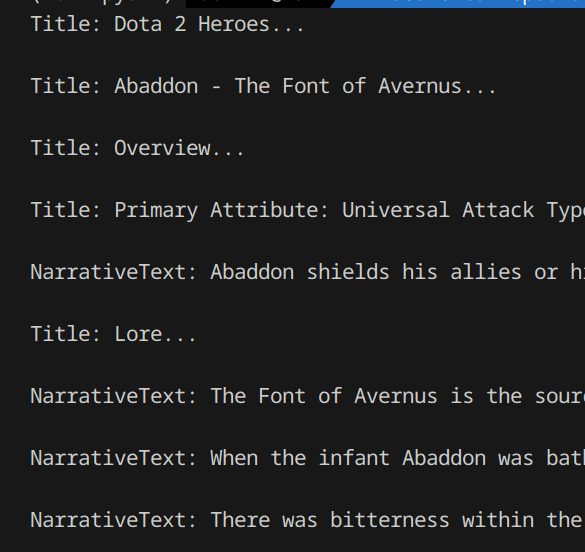

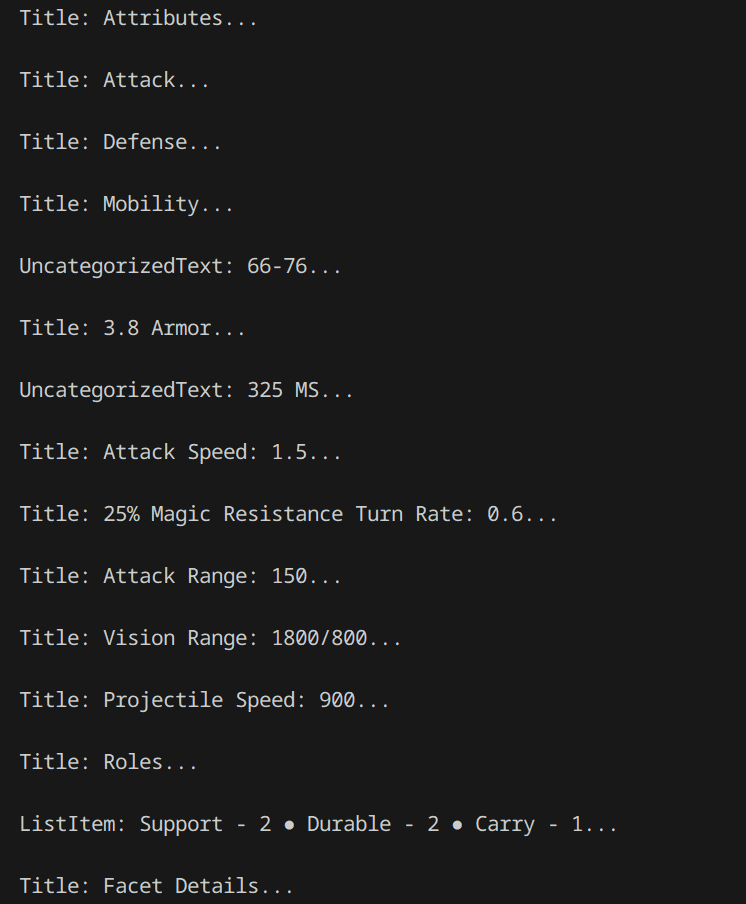

print(f"{element.category}: {element.text[:200]}...\n")Now you can see a summary of each parsed chunk (up to first 200 characters) as output in your terminal. Note how it has identified titles, list items, narrative text, which is quite cleaner compared to raw PyPDF.

Exploring Parsed Elements with Unstructured

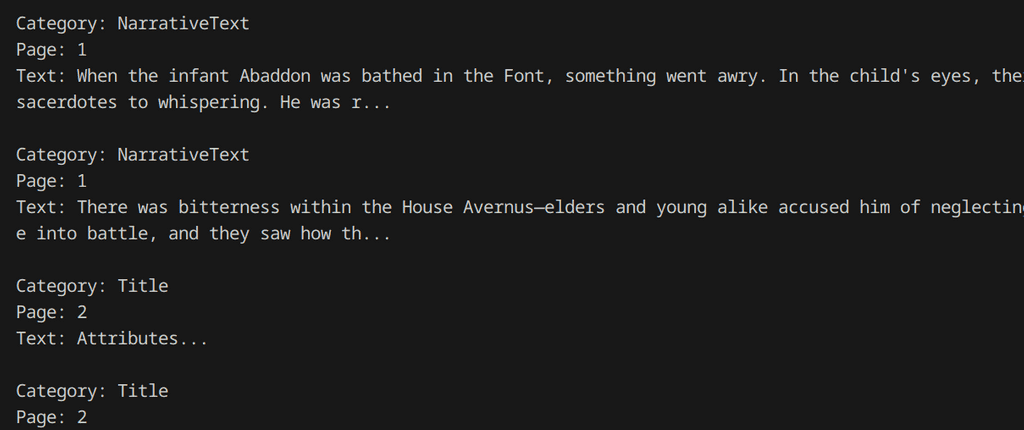

When you run partition_pdf(), Unstructured doesn't just give you plain text. It gives you a list of Element objects, each carrying useful metadata and category information.

Each element has properties like category and text, plus metadata such as page_number (available under element.metadata).

    from unstructured.partition.pdf import partition_pdf

    doc_path = "dota2_heroes.pdf"
    elements = partition_pdf(filename=doc_path, strategy="fast")

    for element in elements:
        print(f"Category: {element.category}")
        print(f"Page: {getattr(element.metadata, 'page_number', 'Unknown')}")
        print(f"Text: {element.text[:200]}...\n")  # Limit to first 200 characters

    print(f"Total number of elements: {len(elements)}")

Why is This Useful?

- You can filter only specific parts easily. (Example: Get all Titles)

- You can analyze page by page

- You can reconstruct a clean structured version of the PDF

- Later, you can build your own custom chunks (like splitting by Title!)
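As a rough sketch of the first two points, the helpers below filter elements by category and group their text by page. They rely only on the category, text, and metadata.page_number attributes used earlier. To keep the example runnable without a PDF, it uses SimpleNamespace objects as stand-ins for real Element objects. The helper names and the sample texts are mine.

```python
from collections import defaultdict
from types import SimpleNamespace


def filter_by_category(elements, category):
    """Keep only the elements whose category matches, e.g. "Title"."""
    return [el for el in elements if el.category == category]


def group_text_by_page(elements):
    """Map page number -> list of element texts, in document order."""
    pages = defaultdict(list)
    for el in elements:
        page = getattr(el.metadata, "page_number", None)
        pages[page].append(el.text)
    return dict(pages)


def make_element(category, text, page):
    # Stand-in for an Unstructured Element (assumption: same attribute names)
    return SimpleNamespace(
        category=category,
        text=text,
        metadata=SimpleNamespace(page_number=page),
    )


if __name__ == "__main__":
    elements = [
        make_element("Title", "Hero A", 1),
        make_element("NarrativeText", "Background of hero A.", 1),
        make_element("Title", "Hero B", 2),
        make_element("ListItem", "First ability of hero B", 2),
    ]
    print([el.text for el in filter_by_category(elements, "Title")])
    print(group_text_by_page(elements))
```

With a real document, you would pass the list returned by partition_pdf() instead of the stand-in elements.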

Example

When you have metadata like page_number or a custom document index, you can filter chunks at retrieval time — without re-parsing or manually splitting documents.

For example, in a RAG (Retrieval-Augmented Generation) system using LangChain's RetrievalQA, you can apply a filter like this:

from langchain.chains import RetrievalQA

qa_chain = RetrievalQA.from_chain_type(

llm,

retriever=vectorstore.as_retriever(

search_type="mmr",

search_kwargs={

"k": blueprint_k, # How many results to return

"lambda_mult": 0.25, # Balancing relevance/diversity

"filter": {"page_number": 1}, # Filter only relevant docs!

},

),

chain_type_kwargs={"prompt": prompt},

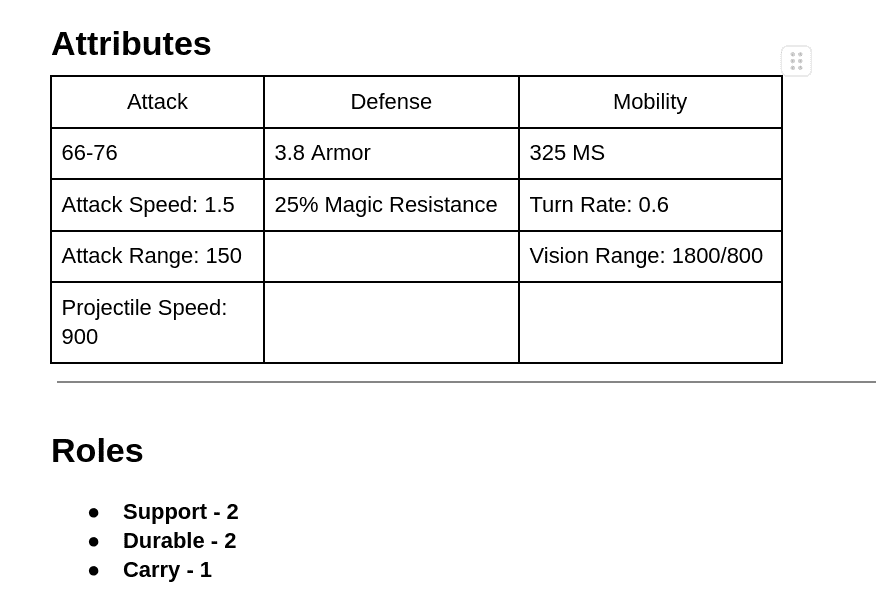

)Limitations: Table Parsing with the Free Library

While the Unstructured library performs well for most structured documents, it currently struggles with complex tables.

As you can see, the row/column structure is lost. This happens because the free library doesn't yet support advanced table detection or OCR alignment. If you like, you can try the paid version of Unstructured and see how it handles this. They currently offer a free one-week trial.

Summary

In this blog, we:

- Set up the project environment

- Parsed a real-world PDF document

- Discussed parsing issues and limitations

- Got a clean, usable text output ready for LLMs

Next Step? 📚

You can try manually grouping the parsed elements into chunks, using different strategies, so you can later feed them into a RAG system while still relying on the free version of Unstructured. In the next blog, we'll take this parsed data, build different chunking strategies (page-wise, overlapping pages, custom), and compare how they impact LLM answers!
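As one possible starting point for that manual grouping, here is a sketch of a title-based strategy: every Title element starts a new chunk, and the elements that follow are appended to it. The function name group_by_title and the stand-in elements are my own. With real data you would pass the list returned by partition_pdf().

```python
from types import SimpleNamespace


def group_by_title(elements):
    """Group element texts into chunks, starting a new chunk at each Title."""
    chunks = []
    current = []
    for el in elements:
        # A Title closes the chunk collected so far (if any) and opens a new one
        if el.category == "Title" and current:
            chunks.append("\n".join(current))
            current = []
        current.append(el.text)
    if current:
        chunks.append("\n".join(current))
    return chunks


if __name__ == "__main__":
    # Stand-ins for Unstructured Element objects (assumption: same attribute names)
    elements = [
        SimpleNamespace(category="Title", text="Hero A"),
        SimpleNamespace(category="NarrativeText", text="Background of hero A."),
        SimpleNamespace(category="Title", text="Hero B"),
        SimpleNamespace(category="ListItem", text="First ability of hero B"),
    ]
    for i, chunk in enumerate(group_by_title(elements)):
        print(f"--- chunk {i} ---")
        print(chunk)
```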